Table of Contents

- Introduction

- Cache Memory

- Characteristics of Cache Memory

- Cache Performance

- Cache Mapping

- Direct Mapping

- Set Associative Mapping

- Fully Associative Mapping

- Applications of Cache Memory

- Advantages of Cache Memory

- Disadvantages of Cache Memory

- Conclusion

Introduction:

Cache memory plays a crucial role in modern computer systems, working behind the scenes to boost overall performance. Think of it as a small, very fast memory bank that holds frequently used data and instructions right at the fingertips of the central processing unit (CPU). It’s like having a librarian who knows your reading preferences and keeps the most borrowed books within arm’s reach. This arrangement reduces the time it takes to access memory, allowing your computer to perform tasks more quickly.

Cache Memory

Cache memory, often called simply “cache,” is a type of high-speed volatile memory that gives the processor fast access to copies of frequently used instructions and data. It’s an essential part of a computer’s memory hierarchy, sitting between the central processing unit (CPU) and the main system memory (RAM). The main purpose of cache memory is to enhance system performance by reducing the time needed to access frequently used data.

Characteristics of Cache Memory:

Speed: Cache memory is much faster than main memory, enabling quick data retrieval by the CPU.

Proximity: It is positioned on or near the CPU to minimize the time spent fetching data.

Data storage: It stores copies of frequently used data and instructions to make operations faster.

Hierarchy: There are usually multiple levels (L1, L2, L3) that trade off speed against capacity, with L1 the smallest and fastest.

Cache hits and misses: A lookup is a hit when the requested data is found in the cache and a miss when it is not.

Coherency: Cached copies must remain consistent with main memory and with each other, particularly in systems with multiple processors.

Cache memory’s role is to make your computer faster by keeping frequently needed data close at hand.

Cache Performance

Cache memory improves performance by reducing the time the CPU spends accessing frequently used data. It does this by keeping copies of that data in a small, high-speed memory located close to the CPU. The CPU can then retrieve the data quickly and goes to the slower main memory (RAM) only on a miss. The result is faster program execution, smoother multitasking and better overall system responsiveness.
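One common way to quantify this effect is the average memory access time, which weights the cache hit time and the miss penalty by how often each occurs. The sketch below is illustrative only: the function name and the latency figures (1 ns for the cache, 100 ns extra for a trip to main memory) are assumed values, not measurements from any particular system.

```python
def average_access_time(hit_ratio, hit_time_ns, miss_penalty_ns):
    """Average access time = hit time + miss ratio * miss penalty."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    miss_ratio = 1.0 - hit_ratio
    return hit_time_ns + miss_ratio * miss_penalty_ns


# Assumed latencies, chosen only to show the trend.
for ratio in (0.50, 0.90, 0.99):
    amat = average_access_time(ratio, hit_time_ns=1.0, miss_penalty_ns=100.0)
    print(f"hit ratio {ratio:.2f} -> average access time {amat:.1f} ns")
```

With these assumed numbers, raising the hit ratio from 0.90 to 0.99 cuts the average access time from about 11 ns to about 2 ns, which is why even small improvements in hit ratio matter.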

Cache Mapping

Cache mapping is the technique that determines where a block of data from main memory (RAM) is placed in the cache. Several mapping methods exist, each with its own advantages and trade-offs. Here are three commonly used approaches:

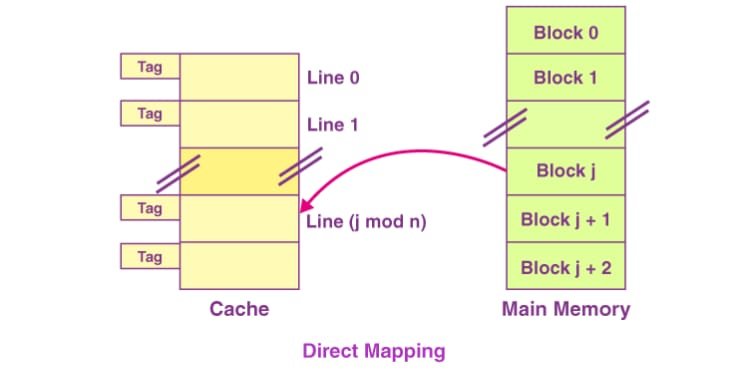

Direct Mapping:

In direct mapping, each block of main memory can be placed in only one specific line of the cache.

A modulo function determines which cache line corresponds to a particular memory block.

While direct mapping is simple and efficient, it can suffer from conflicts (also called collisions) when multiple memory blocks map to the same cache line and repeatedly evict one another.

The performance of a direct-mapped cache depends directly on its hit ratio.

i = j mod m

where

i = cache line number

j = main memory block number

m = number of lines in the cache
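The modulo rule can be sketched in a few lines of Python. This is a toy model rather than a description of real hardware: the function names and the sample trace are made up for illustration, and the cache tracks only block numbers, not addresses or data.

```python
def direct_mapped_line(block_number, num_lines):
    """Return the cache line i = j mod m for memory block j."""
    return block_number % num_lines


def simulate_direct_mapped(block_trace, num_lines):
    """Replay a sequence of block numbers and return (hits, misses)."""
    lines = [None] * num_lines  # lines[i] is the block currently held in line i
    hits = misses = 0
    for block in block_trace:
        i = direct_mapped_line(block, num_lines)
        if lines[i] == block:
            hits += 1
        else:
            misses += 1
            lines[i] = block  # the new block evicts whatever was in the line
    return hits, misses


# With m = 4, blocks 0 and 4 both map to line 0 and keep evicting each other.
trace = [0, 4, 0, 4, 1, 1]
print(simulate_direct_mapped(trace, num_lines=4))  # (1, 5)
```

Only the second access to block 1 hits. Blocks 0 and 4 miss every time even though three of the four lines are never used, which is the conflict problem described above.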

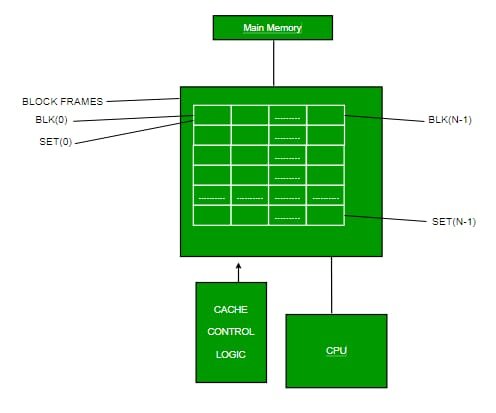

Set Associative Mapping:

In set associative mapping, the cache is divided into sets, and each set holds a small number of lines: more than one, but fewer than the total number of lines in the cache. A memory block maps to exactly one set but may be placed in any line within that set. This approach strikes a balance between the simplicity of direct mapping and the flexibility of fully associative mapping. It reduces conflicts compared with direct mapping, although conflicts can still occur when more blocks compete for a set than it has lines.

The relationships in set associative mapping can be defined as:

m = v * k

i = j mod v

where

i = cache set number

j = main memory block number

v = number of sets

m = number of lines in the cache

k = number of lines in each set
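A minimal Python sketch of these relationships follows. The text does not say which line in a full set gets replaced, so the least-recently-used (LRU) policy used here is an assumption, as are the function name and the sample trace.

```python
from collections import OrderedDict


def simulate_set_associative(block_trace, num_sets, lines_per_set):
    """Replay block numbers through a cache of v sets with k lines each.

    Returns (hits, misses). Replacement within a set is LRU (assumed).
    """
    # One OrderedDict per set; key order tracks recency, oldest first.
    sets = [OrderedDict() for _ in range(num_sets)]
    hits = misses = 0
    for block in block_trace:
        cache_set = sets[block % num_sets]  # i = j mod v
        if block in cache_set:
            hits += 1
            cache_set.move_to_end(block)  # mark as most recently used
        else:
            misses += 1
            if len(cache_set) >= lines_per_set:
                cache_set.popitem(last=False)  # evict the least recently used block
            cache_set[block] = True
    return hits, misses


# m = v * k = 4 lines in total, organised as v = 2 sets of k = 2 lines.
trace = [0, 4, 0, 4, 1, 1]
print(simulate_set_associative(trace, num_sets=2, lines_per_set=2))  # (3, 3)
```

Blocks 0 and 4 still map to the same set, but with two lines per set they can coexist, so their repeated accesses hit. Calling the same function with `lines_per_set=1` and `num_sets=4` reproduces direct-mapped behaviour for the same total capacity.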

Fully Associative Mapping:

In fully associative mapping, any memory block can be placed in any cache line. This approach offers maximum flexibility and minimizes conflicts, since any line can accommodate any block. However, it is more complex and costly to implement than direct or set associative mapping, because a lookup must compare the requested address against the tag of every line in the cache.
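As a rough illustration, a fully associative cache can be modelled as a single pool of lines. In the sketch below the class name is hypothetical, the LRU replacement policy is an assumption (the text does not specify one), and the dictionary lookup merely stands in for the parallel tag comparison that hardware would perform.

```python
from collections import OrderedDict


class FullyAssociativeCache:
    """Toy fully associative cache: any block may occupy any line."""

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.blocks = OrderedDict()  # cached block numbers, oldest first

    def access(self, block):
        """Return True on a hit; on a miss, load the block and return False."""
        if block in self.blocks:
            self.blocks.move_to_end(block)  # mark as most recently used
            return True
        if len(self.blocks) >= self.num_lines:
            self.blocks.popitem(last=False)  # LRU eviction (assumed policy)
        self.blocks[block] = True
        return False


cache = FullyAssociativeCache(num_lines=4)
results = [cache.access(b) for b in [0, 4, 8, 12, 0, 4]]
print(results)  # [False, False, False, False, True, True]
```

Blocks 0, 4, 8 and 12 would all compete for line 0 in a four-line direct-mapped cache, but here they occupy four different lines, so the repeated accesses to blocks 0 and 4 hit.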

Cache mapping plays a crucial role in optimizing cache performance as it determines how efficiently cache memory is utilized. The choice of mapping technique impacts cache hit rates, access times and overall system performance. The ultimate goal is to find a balance between simplicity and cache efficiency to ensure that frequently accessed data is readily available in the cache, reducing the need for slower main memory access.

Applications of Cache Memory:

Caching is used across many kinds of computing systems to speed up data access. Some key applications include:

Processor Caches: Enhancing CPU performance by storing frequently used instructions and data, thereby reducing memory access times.

Web Browsers: Caching frequently visited web pages and their associated assets to speed up website loading.

Database Systems: Caching frequently accessed database records to reduce query response times.

Operating Systems: Caching recently used files and program code to improve overall system responsiveness.